Introducing LayerDiffuse: Generate images with built-in transparency in one step

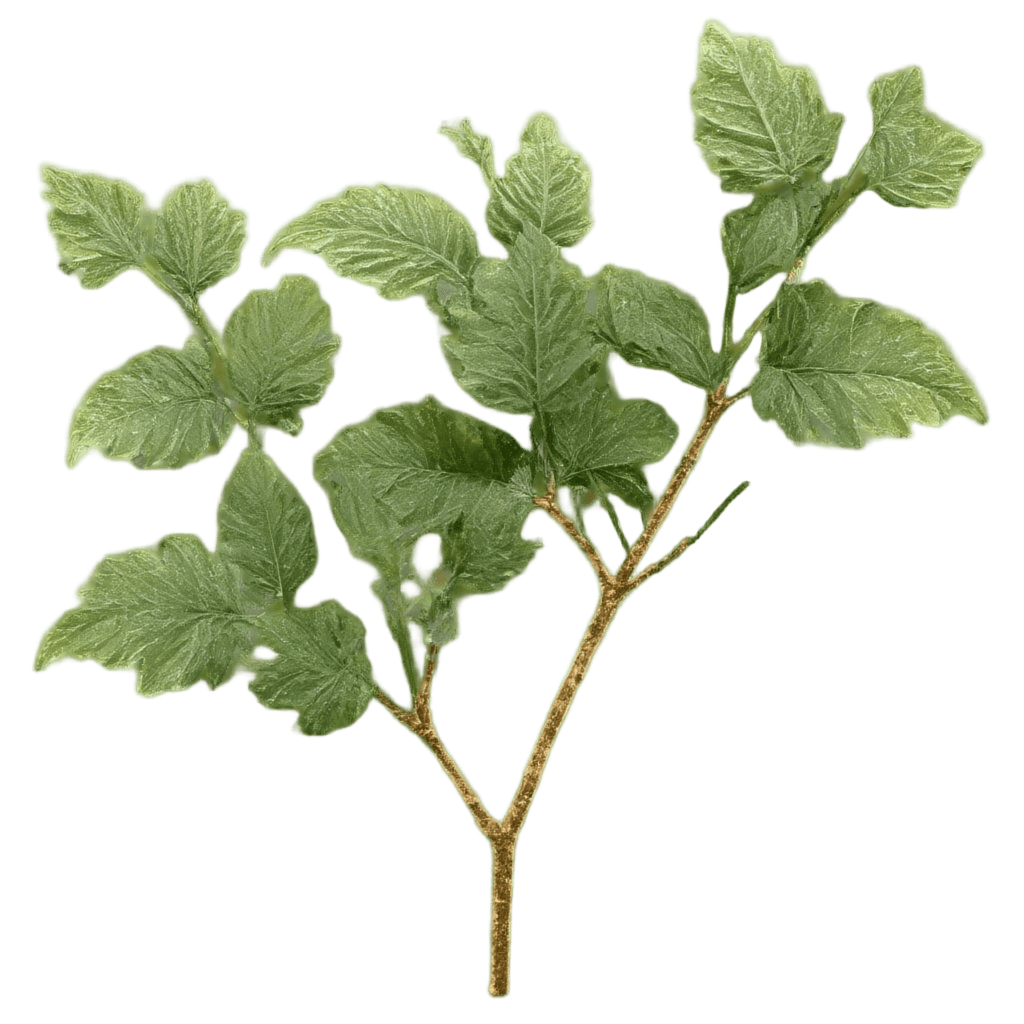

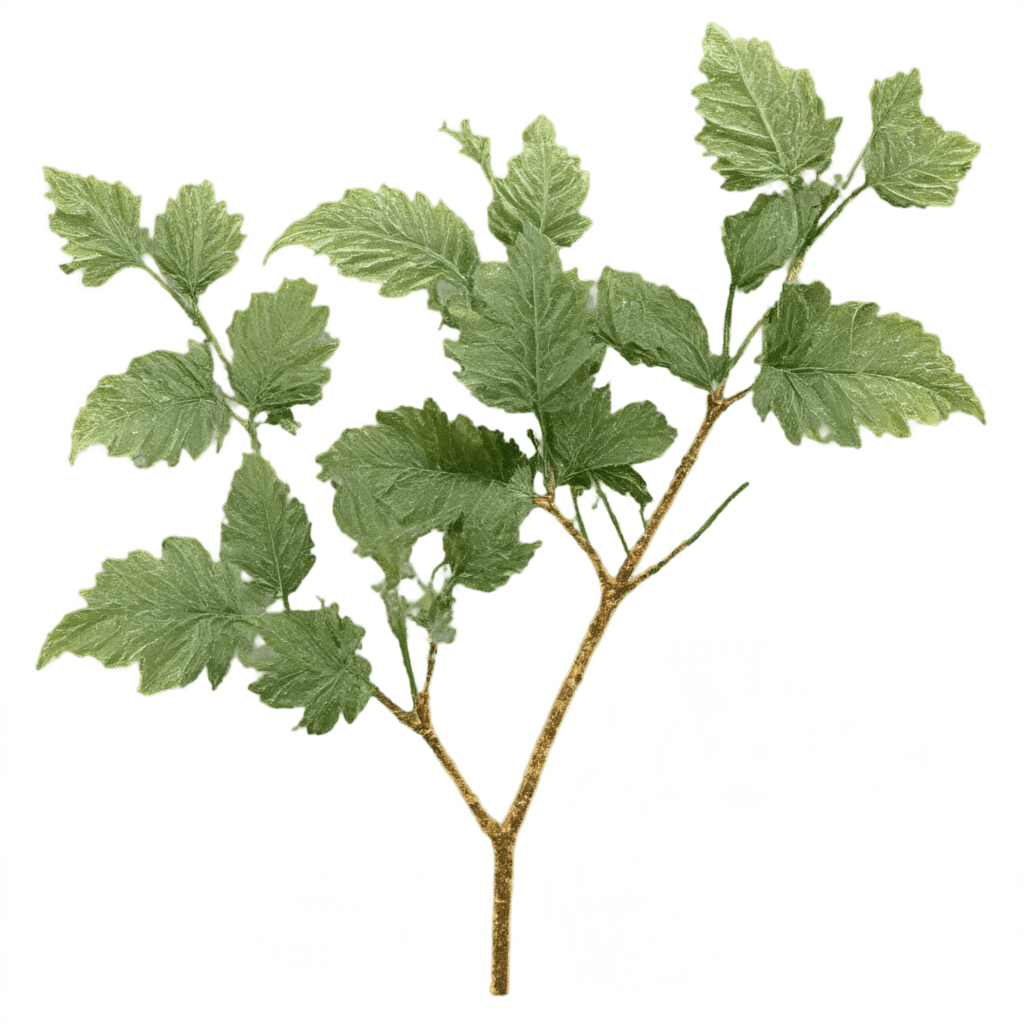

Generate images with built-in transparency directly from your prompts, eliminating the need for background removal in a single, efficient step.

Introduction

Our API now includes LayerDiffuse (paper), a feature that transforms how AI generates images with transparency. You can now create images with built-in alpha channels directly from your prompts, without needing a separate background removal step.

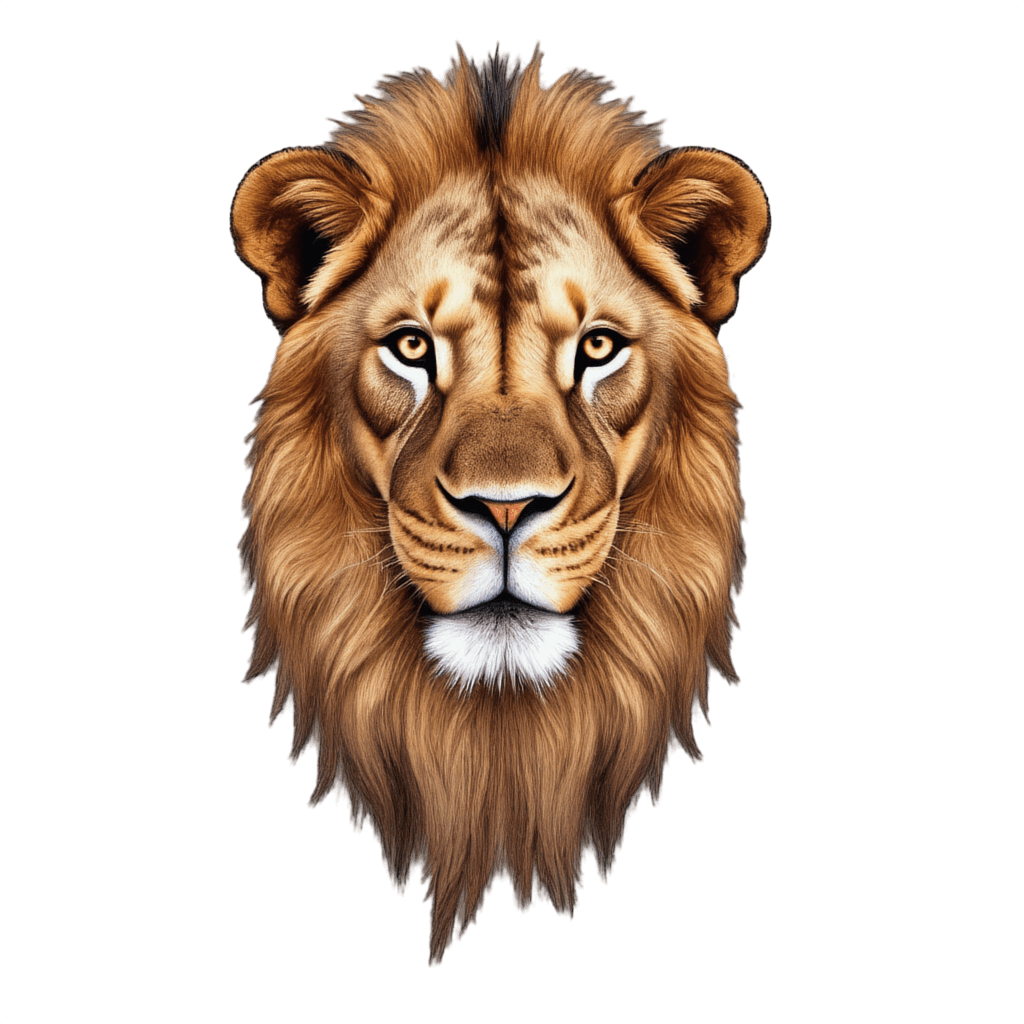

Instead of generating flat images with opaque backgrounds, LayerDiffuse integrates transparency into the generation process itself. This results in cleaner results around fine details, especially around complex edges like hair/fur and semi-transparent materials, making it ideal for web design and and product visuals.

How LayerDiffuse works

The core innovation behind LayerDiffuse is something called latent transparency, a technique that enables pretrained diffusion models to generate RGBA images without modifying their core architecture.

Traditional diffusion models operate in a latent space that only encodes RGB features. They have no concept of transparency. LayerDiffuse solves this by learning how to represent transparency as a latent offset that can be added into the model’s existing latent space. The original structure stays intact, but now the model knows which parts should fade, blend, or disappear. This makes it possible to produce transparent images without retraining the diffusion model itself.

Latent space is the model’s internal representation of an image. It’s a compressed format where it holds abstract information like shape and color without generating actual pixels. Diffusion models refine this internal data step by step before decoding it into a final image. Transparency can be introduced at this stage, before anything is rendered.

Training phase

To make this work, LayerDiffuse introduces a new transparent VAE trained on RGBA images. The encoder learns to handle the RGB content and the alpha channel separately. It applies smart padding to the transparent parts of the image, filling in those empty areas with soft, context-aware colors to avoid harsh transitions at the edges. This helps the model learn clean outlines without artifacts. The alpha channel is then encoded into a compact latent format, which is transformed into a latent offset that guides transparency during generation.

This offset is trained to shift the latent distribution just enough to embed transparency, but not so much that it breaks the model’s generation quality. Only the VAE is trained during this phase, the diffusion model remains frozen.

For multi-layer generation, LayerDiffuse also trains two LoRA modules: one for the foreground layer and one for the background. These modules are small adapters added to the frozen diffusion model. They are trained using a shared attention mechanism so that both layers stay visually consistent.

Inference phase

At generation time, a standard RGB image latent is produced from your prompt using the frozen base model. LayerDiffuse then adds the precomputed latent offset representing the transparency. This results in a combined latent that carries both image content and alpha information.

The modified latent is passed through the diffusion process as usual. If multi-layer generation is enabled, the foreground and background LoRAs guide the model to produce each layer separately while keeping them aligned.

Finally, a custom decoder reconstructs both the RGB image and the alpha channel from the final latent. The output is a true RGBA image, not an RGB image with a mask applied afterward. Transparency is built into the result from the beginning.

This approach gives you transparent image generation without retraining large models. Only the VAE and (optionally) LoRA modules are trained, making it efficient, compatible, and high quality.

Getting started with LayerDiffuse

Using LayerDiffuse is straightforward with our API. Just enable it in your request payload to unlock transparent image generation.

Here's a basic example:

[

{

"taskType": "imageInference",

"taskUUID": "a2728981-a3bd-45ae-b263-a6accde10335",

"outputFormat": "webp",

"positivePrompt": "a cat, detailed fur, studio lighting",

"model": "runware:101@1",

"width": 1024,

"height": 1024

"advancedFeatures": {

"layerDiffuse": true

}

}

]The key part is "layerDiffuse": true inside the advancedFeatures object. That’s all it takes to enable alpha-aware image generation.

Remember you can also manually specify the transparent VAE (runware:120@4) and LoRA (runware:120@2) in your request if you prefer explicit control, instead of relying on the automatic LayerDiffuse setup.

[

{

"taskType": "imageInference",

"taskUUID": "a2728981-a3bd-45ae-b263-a6accde10335",

"outputFormat": "WEBP",

"positivePrompt": "a cat, detailed fur, studio lighting",

"model": "runware:101@1",

"width": 1024,

"height": 1024,

"vae": "runware:120@4",

"lora": [{ "model": "runware:120@2" }]

}

]A few important things to keep in mind:

- Use

pngorwebpas youroutputFormat, as these are the formats that support transparency. - LayerDiffuse currently works only with FLUX Dev models. Support for additional models may be added in the future.

- Resolutions of 768×768 or higher tend to preserve fine transparent details more effectively.

- You can combine LayerDiffuse with other LoRAs to stylize your output, just like any regular generation task.

- You can expect to do some cherry-picking, sometimes more than with base FLUX generations.

For all available options and advanced parameters, check our API reference.

Prompting tips for best results

To get clean transparent outputs with LayerDiffuse, it's important to steer the model away from busy or cluttered scenes.

You can improve results by including terms like these:

- Subject Isolation: isolated subject, cutout, floating object, no background, object-centered.

- Lighting & Photography Style: studio lighting, product photography, neutral lighting, soft shadows, controlled lighting, high key lighting.

- Framing & Perspective: centered, headshot, close-up, top-down view, side view, full body, macro.

- Media & Illustration Style: digital painting, watercolor, pixel art, line art, botanical illustration, storybook style, flat design.

- Materials & Effects: glass, fur, hair, fabric, transparent, reflections, smoke, liquid, semi-transparent material.

Try mixing and matching terms from each group to guide the model more clearly and reduce unwanted background content.

While negative prompts would help suppress background elements even further, they are not available in the FLUX architecture, so it’s especially important to guide the model clearly using positive phrasing.

Playground

Here's a quick demo so you can try LayerDiffuse right in this article. Generate a few transparent images to see how it handles different subjects and edge details. For the full experience with advanced parameters, model selection, batch generation, and complete workflow control, check out the Playground inside your dashboard.

What you can do with LayerDiffuse

LayerDiffuse unlocks use cases where traditional AI image generation falls short, especially when transparency is essential to the final output.

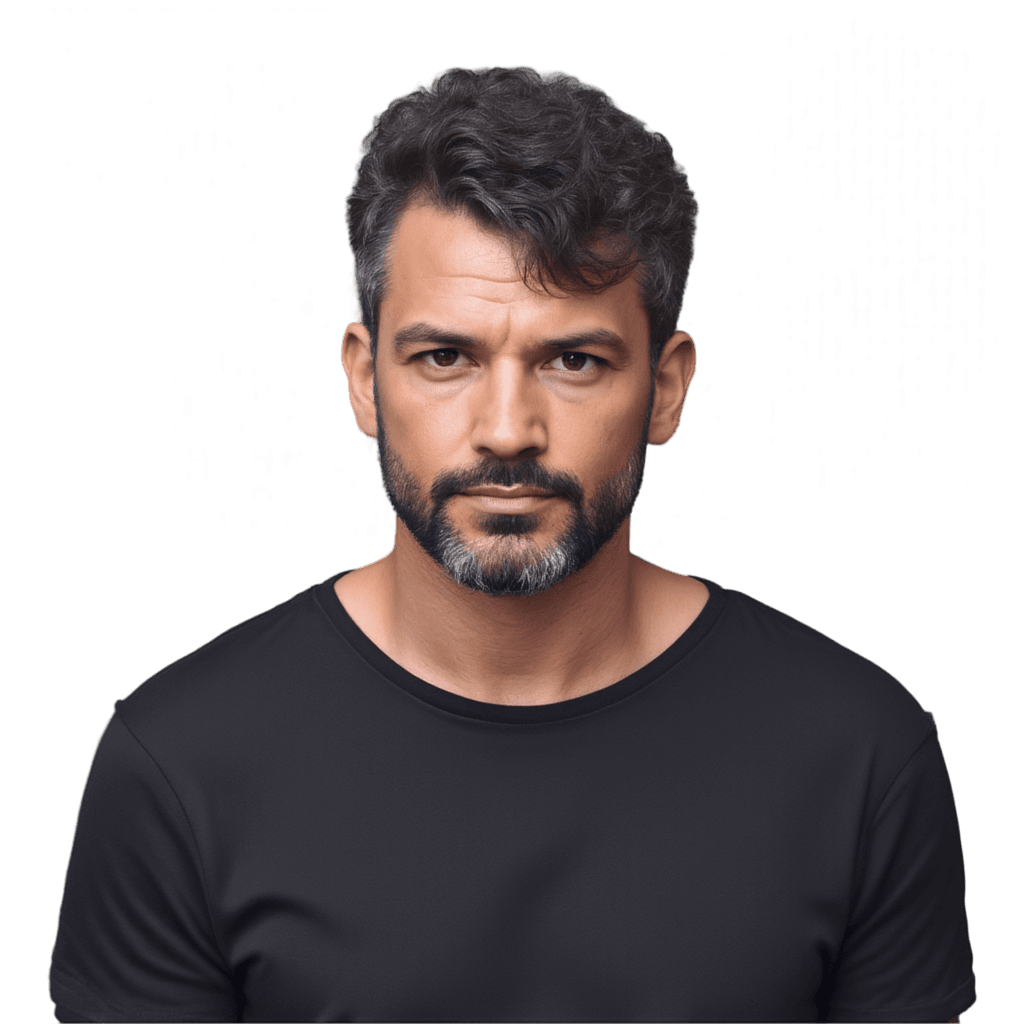

You can generate web-ready assets like icons and logos directly from text prompts. They adapt naturally to light and dark themes, which makes them ideal for interface design and branding systems.

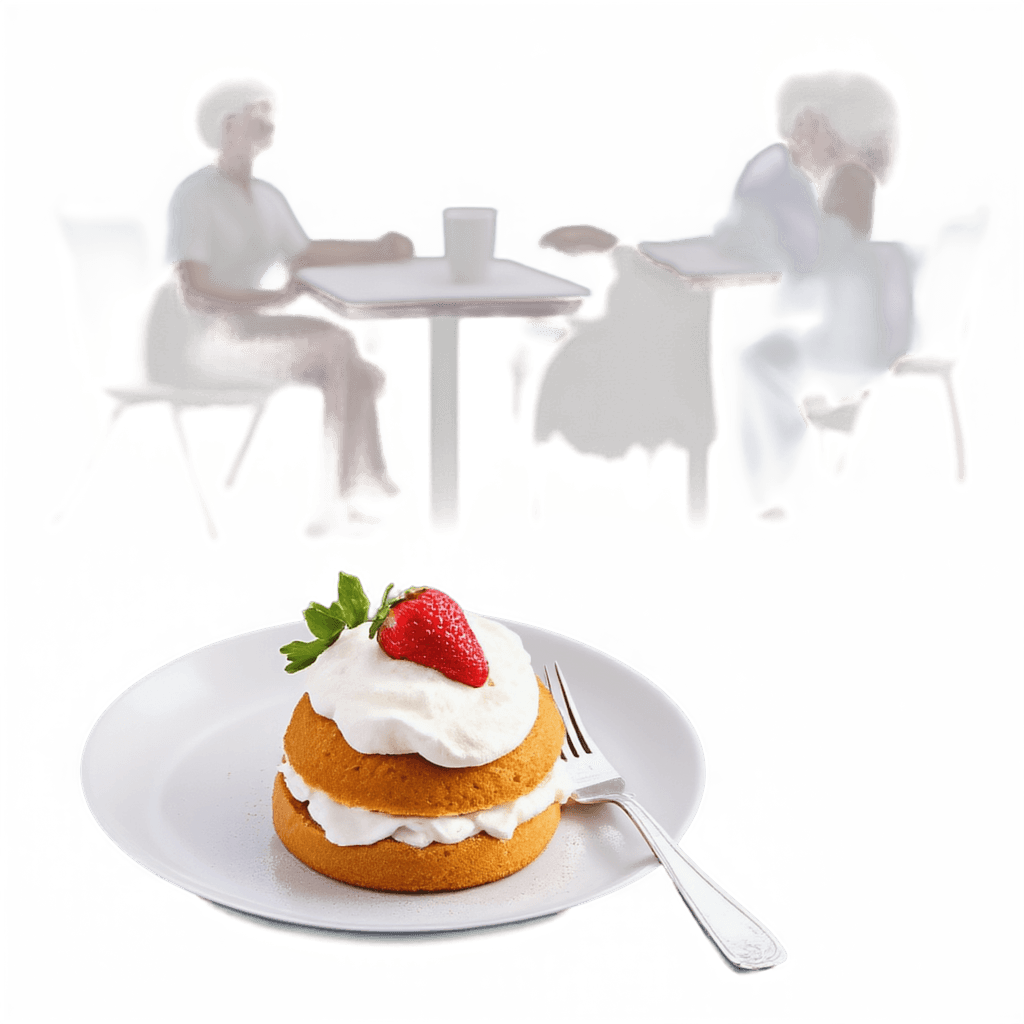

In product workflows, LayerDiffuse makes it easy to create isolated product images that are ready for e-commerce or advertising. There’s no need to remove backgrounds manually or set up a studio-style render. This is especially useful when generating product mockups designed to work across different layouts or marketing environments.

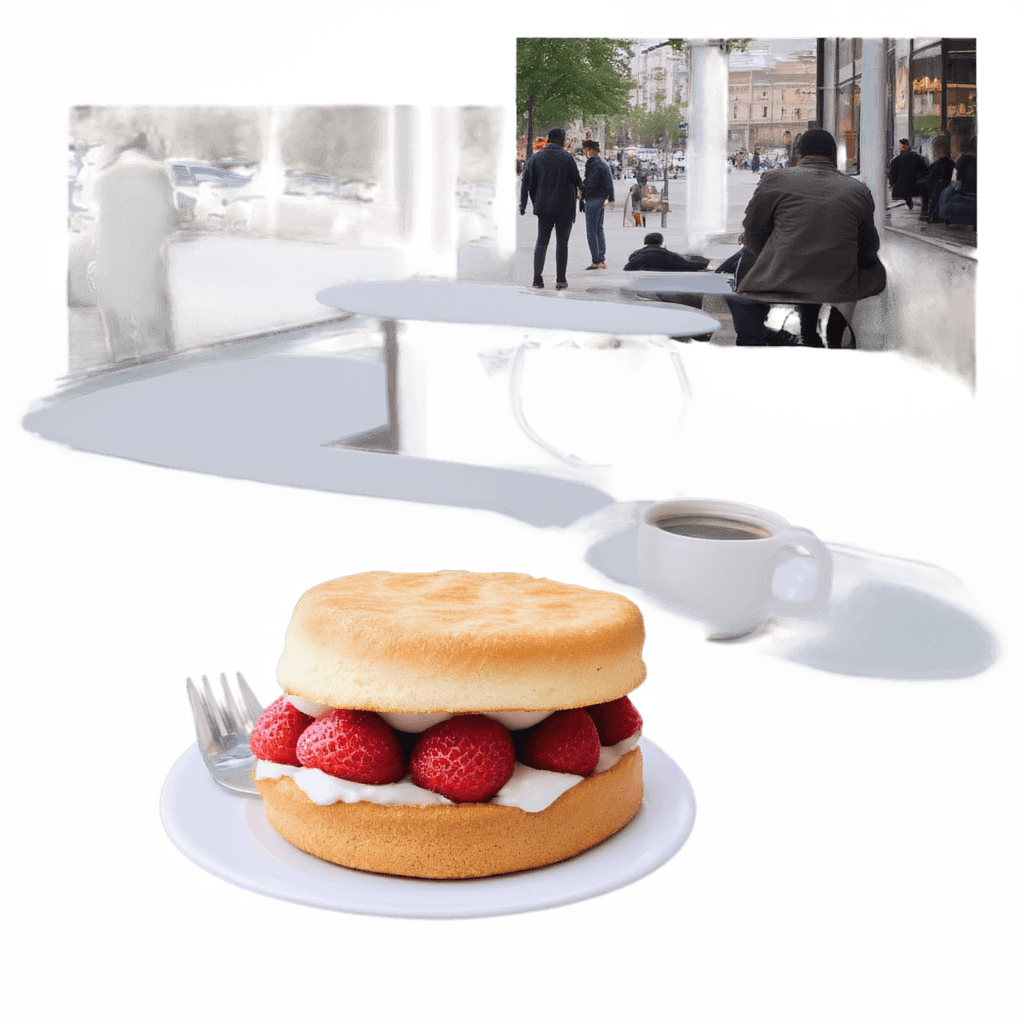

LayerDiffuse is also well suited for educational and creative publishing workflows. It allows you to generate clean, transparent illustrations that can be placed directly into books without manual cleanup. These images remain sharp across layouts and can be easily reused in different contexts, from print to digital.

Finally, it’s a powerful tool for game development and asset pipelines. You can generate transparent UI elements, item icons, effects, or sprite-like objects that are ready to drop into 2D engines. This removes the usual need for hand-editing game art or separating layers in Photoshop.

These examples are just a starting point. You can combine styles or test unusual materials that would normally take hours to clean up by hand. Think beyond isolated objects and try semi-transparent effects or layered illustrations. LayerDiffuse gives you room to experiment without worrying about the cleanup process.

LayerDiffuse vs. background removal

Both tools let you create transparent images, but they serve different purposes in your workflow.

Use LayerDiffuse when you're generating a new image and want transparency built in from the start. It's a one-step process that works only with FLUX models, but gives you clean transparency directly from the prompt with no extra passes or cleanup.

Background removal is more versatile. It works with any model architecture, including photos and previously generated images. Some background removal models also give you fine-grained control to influence how the background is removed. This makes it a better choice when you need flexibility or want to process existing assets.

Conclusion

LayerDiffuse simplifies the way you generate transparent images. Instead of generating first and removing the background afterward, you get transparency built into the image from the start, with no extra steps.

It’s particularly useful when working with difficult subjects like hair, transparent materials (such as glass or smoke), or fine edges that usually require manual cleanup. By making transparency part of the generation process itself, LayerDiffuse helps you get more natural results avoiding extra post-processing. This feature is designed to fit directly into real-world workflows.

You can start using LayerDiffuse now through our API. Check the documentation for setup details, or try it instantly in the Playground.